TinyLlama 1.1B: Compact Model for Low-End PCs

Introducing TinyLlama 1.1B, a compact model based on the Llama 2 architecture that supports a range of applications on low-end PCs.

00:00:00 Introducing TinyLlama 1.1B, a compact model based on the Llama 2 architecture that supports a range of applications on low-end PCs. Learn how to install it and explore its training details.

🦙 Introducing the TinyLlama 1.1B model, a compact model built on the Llama 2 architecture with 1.1 billion parameters.

⚡️ TinyLlama's small size keeps compute and memory requirements low, making it suitable for a variety of applications.

🔧 The video provides instructions on how to install the TinyLlama model and explores its training details and potential use cases.

00:01:33 Introducing the TinyLlama 1.1B language model, trained on a massive 3 trillion token dataset. Learn about its architecture and capabilities in this installation tutorial.

🦙 TinyLlama 1.1B is a language model with 1.1 billion parameters trained on 3 trillion tokens in just 90 days.

📚 TinyLlama follows the Llama 2 architecture, which the video presents as central to its capabilities and usability.

🔗 Seamless integration is one of the key features of TinyLlama.
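As a rough sanity check on the "3 trillion tokens in 90 days" figure above, the sketch below works out the aggregate throughput such a run would need. The function name is illustrative, and the calculation assumes training runs without interruption for the full 90 days, which the video does not state.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def required_tokens_per_second(total_tokens: float, days: float) -> float:
    """Aggregate throughput needed to process `total_tokens` within `days`."""
    return total_tokens / (days * SECONDS_PER_DAY)


# Figures from the summary: 3 trillion tokens, 90 days.
rate = required_tokens_per_second(3e12, 90)
print(f"{rate:,.0f} tokens/second across the whole training cluster")
```

This comes out to a few hundred thousand tokens per second in total, which is why the training-side optimizations discussed later in the video matter.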

00:03:08 The video introduces TinyLlama, a model with 1.1 billion parameters that can be easily substituted for larger Llama models in open source projects.

🔌 TinyLlama can be plugged into open source projects built on Llama, letting users run fine-tuned models with fewer resources.

💾 With only 1.1 billion parameters, TinyLlama can be substituted for larger Llama models, so users can try the same tooling at a smaller model size.

🖥️ Users with lower-spec computers can run TinyLlama in place of larger models and explore what Llama-based tools can do, thanks to this plug-and-play compatibility.

00:04:43 The 3 trillion training tokens are sourced from a combination of datasets. Advanced optimization techniques make training faster and more efficient, achieving high model FLOPs utilization (MFU) without activation checkpointing and reducing the memory footprint. The segment also covers training TinyLlama on different GPUs.

📊 The video discusses how the 3-trillion-token training set for TinyLlama was put together.

⚡ Advanced optimization techniques were used to make TinyLlama faster and more efficient.

💾 The optimizations also reduced the memory footprint of TinyLlama.
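Model FLOPs utilization (MFU), mentioned in this segment, is the fraction of a GPU's peak throughput that training actually achieves. A minimal sketch of the usual estimate follows, assuming the common approximation of about 6 FLOPs per parameter per training token (which ignores attention FLOPs). The throughput and peak-FLOPs inputs are placeholder assumptions for illustration, not figures from the video.

```python
def estimate_mfu(num_params: float,
                 tokens_per_second_per_gpu: float,
                 peak_flops_per_gpu: float) -> float:
    """Estimate MFU with the ~6 * N FLOPs-per-token approximation.

    The factor 6 covers forward and backward passes over the weights
    and leaves out attention FLOPs, so this is a rough estimate only.
    """
    achieved_flops = 6 * num_params * tokens_per_second_per_gpu
    return achieved_flops / peak_flops_per_gpu


# Placeholder inputs (assumptions, not taken from the video):
# 1.1B parameters, 24,000 tokens/s per GPU, 312 TFLOP/s peak per GPU.
mfu = estimate_mfu(1.1e9, 24_000, 312e12)
print(f"Estimated MFU: {mfu:.1%}")
```

Avoiding activation checkpointing helps this number because checkpointing recomputes parts of the forward pass, spending FLOPs that do not count toward useful model work.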

00:06:18 This video introduces the TinyLlama 1.1B model and its installation tutorial, showcasing its efficient RAM utilization and comparison to other models.

📊 The 4-bit quantized TinyLlama 1.1B weights occupy only about 550 MB of RAM, showcasing the model's efficiency.

🗂️ Intermediate checkpoints are released to compare TinyLlama's performance against other models.

🛠️ To install, first download the text generation web UI and then launch it in chat mode.
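The 550 MB figure quoted above follows directly from the parameter count: 1.1 billion weights at 4 bits each. The sketch below reproduces that arithmetic. The helper name is illustrative, and the estimate counts weights only, leaving out quantization metadata, activations, and the KV cache.

```python
def weight_memory_mb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in megabytes (1 MB = 10**6 bytes)."""
    return num_params * bits_per_weight / 8 / 1e6


print(weight_memory_mb(1.1e9, 4))   # 4-bit quantized: 550.0
print(weight_memory_mb(1.1e9, 16))  # 16-bit weights:  2200.0
```

The same model in 16-bit precision needs roughly four times the memory, which is why the quantized version is the one suggested for low-end machines.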

00:07:53 Learn how to install the TinyLlama model in the text generation web UI, chat with it, and understand the cross-entropy loss metric.

📥 The video demonstrates how to install the TinyLlama model using Pinokio or the GitHub installation method.

🔗 To install the model, users copy the model card link from Hugging Face, paste it into the text generation web UI, and click download.

📈 The video also discusses cross-entropy loss, the metric used when training language models to measure how far the model's predicted token probabilities are from the actual target tokens.
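To make the cross-entropy loss described above concrete, here is a minimal sketch in plain Python. It uses a toy three-token vocabulary and made-up logits; real training code would use a framework's built-in loss, and none of the names below come from the video.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits_per_position, target_ids):
    """Mean negative log-probability assigned to each correct next token."""
    losses = []
    for logits, target in zip(logits_per_position, target_ids):
        probs = softmax(logits)
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)


# Toy example: two positions, vocabulary of three tokens.
logits = [[2.0, 0.5, 0.1], [0.1, 0.2, 3.0]]
targets = [0, 2]  # index of the correct next token at each position
loss = cross_entropy(logits, targets)
print(f"loss = {loss:.3f}, perplexity = {math.exp(loss):.3f}")
```

A lower loss means the model put more probability on the tokens that actually came next. A model that guesses uniformly over a vocabulary of size V has a loss of ln(V).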

00:09:28 A tutorial on installing TinyLlama, the new, smaller Llama-style model, recommended for anyone who wants to get the most out of it on modest hardware.

🦙 The video introduces a new Llama-style model with a smaller parameter count that can run on personal computers.

💻 The model is compatible with many different types of computers and lets users explore the potential of Llama models.

🔗 Links to the project, Discord, Patreon, and Twitter pages are provided in the video description for further exploration.

You might also like...

Read more on Science & Technology

🔥 AI Specialist Course 2023 | AI Specialist Training For 2023 | AI Basics In 9 Hours | Simplilearn

I used 1980s technology for a week

Lecture 11 – Semantic Parsing | Stanford CS224U: Natural Language Understanding | Spring 2019

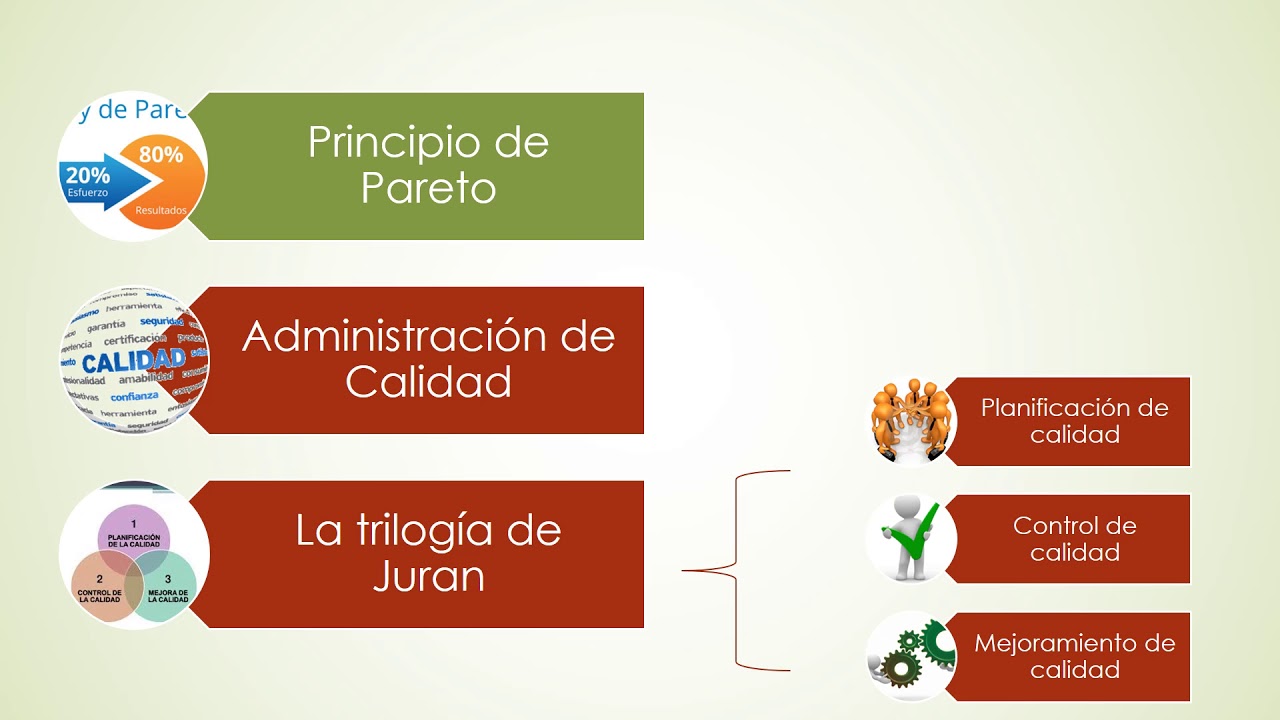

Joseph Juran Filosofía de la calidad

Excellence Series | Personality Development: Episode 2 | What Determines Our Personality?

DESCARGAR E INSTALAR OFFICE 2023 CON LICENCIA