Introduction to Offline Reinforcement Learning with Linear Function Approximation

This lecture explains offline model-based reinforcement learning using linear function approximation and least squares.

00:00:01 This lecture covers the use of linear fitted value functions in offline reinforcement learning. Although these methods are not commonly used today, understanding them provides valuable insights for future algorithm development and analysis.

📚 Understanding linear fitted value functions is valuable for analyzing and developing deep offline reinforcement learning methods.

🔎 Classical offline value function estimation extends existing ideas for approximate dynamic programming and Q-learning using simple function approximators.

🔬 Current research focuses on deriving approximate solutions with neural nets as function approximators, with the primary challenge being distributional shift.

00:03:07 This video explains how to do offline model-based reinforcement learning using linear function approximation and least squares. It discusses estimating rewards and transitions in terms of features and solving least-squares equations to recover the value function.

🔑 Offline model-based reinforcement learning can be performed in the feature space.

💡 Linear function approximation is used to estimate the reward and transitions based on the features.

🧩 The least-squares solution gives the reward weight vector; the feature matrix multiplied by this vector approximates the true reward.
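
The reward fit described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the lecture's code: the names `fit_reward_weights`, `Phi`, `r`, and `w_r`, and the toy numbers, are assumptions made for the example. `Phi` holds one feature row per state and `r` holds the corresponding rewards.

```python
import numpy as np

def fit_reward_weights(Phi, r):
    """Return w_r minimizing ||Phi @ w_r - r||^2 (ordinary least squares)."""
    w_r, *_ = np.linalg.lstsq(Phi, r, rcond=None)
    return w_r

# Hypothetical toy problem: 4 states, 2 features per state (bias, position).
Phi = np.array([[1.0, 0.0],
                [1.0, 1.0],
                [1.0, 2.0],
                [1.0, 3.0]])
# Rewards chosen to be exactly linear in the features, so the fit is exact.
r = np.array([0.0, 1.0, 2.0, 3.0])

w_r = fit_reward_weights(Phi, r)
print(w_r)        # weights on (bias, position)
print(Phi @ w_r)  # reconstructed rewards
```

When the reward is not exactly linear in the features, `Phi @ w_r` is only the closest linear approximation in the squared-error sense.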

00:06:12 This lecture discusses the concept of transition models in offline reinforcement learning and how to approximate the effect of the real transition matrix on the features.

Offline Reinforcement Learning in a sample-based setting.

Transition models describe how features in the present become features in the future.

Policy-specific transition matrices are used in policy evaluation and policy improvement.
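
As a rough sketch of a feature-space transition model, assume a known policy-specific transition matrix `P_pi` (rows index the current state) and a feature matrix `Phi`. A small matrix `P_phi` can then be fit by least squares so that `Phi @ P_phi` approximates `P_pi @ Phi`, the expected next-state features. The function name and toy matrices below are illustrative assumptions.

```python
import numpy as np

def feature_transition(Phi, P_pi):
    """Least-squares fit of P_phi such that Phi @ P_phi ~= P_pi @ Phi.

    Equivalent to (Phi^T Phi)^{-1} Phi^T P_pi Phi when Phi has full column rank.
    """
    P_phi, *_ = np.linalg.lstsq(Phi, P_pi @ Phi, rcond=None)
    return P_phi

# Hypothetical 3-state chain under a fixed policy: 0 -> 1 -> 2 -> 0.
P_pi = np.array([[0.0, 1.0, 0.0],
                 [0.0, 0.0, 1.0],
                 [1.0, 0.0, 0.0]])
# Two features per state.
Phi = np.array([[1.0, 0.0],
                [0.0, 1.0],
                [1.0, 1.0]])

P_phi = feature_transition(Phi, P_pi)  # K x K matrix, here 2 x 2
```

With tabular (identity) features the fit recovers `P_pi` itself, which is a useful sanity check.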

00:09:18 CS 285: Lecture 15, Part 3: Offline Reinforcement Learning. The video discusses the vector-valued version of the Bellman equation and how to solve it using linear equations.

📝 The value function in reinforcement learning can be represented as the feature matrix multiplied by a vector of weights.

🔢 The vector-valued version of the Bellman equation can be written as a linear equation, where the value function is the solution.

💡 The value function can be recovered as a solution to a system of linear equations, even in feature space.
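
The linear-equation view can be written out as follows. The notation is an assumption made for this sketch: $V^\pi$ is the vector of state values, $r$ the reward vector, $P^\pi$ the policy-specific transition matrix, $\gamma$ the discount factor, $\Phi$ the feature matrix, $P_\Phi$ the feature-space transition matrix, and $w_r$, $w_V$ the reward and value weights.

```latex
\begin{align*}
V^\pi &= r + \gamma P^\pi V^\pi
  && \text{(vector-valued Bellman equation)} \\
(I - \gamma P^\pi)\, V^\pi &= r
  && \text{(collect the $V^\pi$ terms)} \\
V^\pi &= (I - \gamma P^\pi)^{-1} r
  && \text{(solve the linear system)} \\
w_V &= (I - \gamma P_\Phi)^{-1} w_r
  && \text{(same form in feature space, with } V^\pi \approx \Phi w_V \text{)}
\end{align*}
```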

00:12:25 This part explains the least-squares temporal difference (LSTD) formula and how to apply it to offline reinforcement learning using samples, without knowing the transition matrix and reward vector.

🔑 Least Squares Temporal Difference (LSTD) is a formula in classic reinforcement learning that relates a transition matrix and reward vector to the weights on the value function.

🔍 LSTD does not require complete knowledge of the transition matrix and reward vector; it can be solved using samples from an offline dataset.

⚙️ The empirical MDP, induced by the empirical samples, allows for a sample-wise estimate using the same equation.
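
A sample-based LSTD estimate might look like the sketch below. It assumes the standard form $w_V = (\Phi^\top \Phi - \gamma \Phi^\top \Phi')^{-1} \Phi^\top r$, where each row of `Phi` is the feature vector of a sampled state and the matching row of `Phi_next` is the feature vector of the observed next state. The function name and the two-state toy dataset are hypothetical.

```python
import numpy as np

def lstd_weights(Phi, Phi_next, r, gamma):
    """Solve (Phi^T (Phi - gamma * Phi_next)) w = Phi^T r for the value weights w."""
    A = Phi.T @ (Phi - gamma * Phi_next)
    b = Phi.T @ r
    return np.linalg.solve(A, b)

# Hypothetical dataset with tabular (one-hot) features over two states:
#   sample 1: s0 -> s1 with reward 1
#   sample 2: s1 -> s1 with reward 1 (s1 loops on itself)
Phi      = np.array([[1.0, 0.0],
                     [0.0, 1.0]])
Phi_next = np.array([[0.0, 1.0],
                     [0.0, 1.0]])
r = np.array([1.0, 1.0])

w_V = lstd_weights(Phi, Phi_next, r, gamma=0.9)
print(w_V)  # estimated values of s0 and s1
```

Because every step yields reward 1, the discounted value of both states is 1 / (1 - 0.9) = 10, which is what the solve returns for this toy dataset.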

00:15:31 This part introduces Least Squares Policy Iteration (LSPI), a method for estimating the Q-function in offline reinforcement learning. LSPI allows us to estimate the value of a policy using previously collected data, without requiring knowledge of the dynamics of the environment.

🔑 Instead of estimating the reward and transition explicitly, we directly estimate the value function using samples and improve the policy.

🔄 We alternate between estimating the value function for the current policy and recovering the greedy policy under that estimate, repeating until the policy stops changing.

🎯 For offline reinforcement learning, we estimate the Q-function instead of the value function using state-action features.
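
The alternation described above can be sketched as a small LSPI-style loop. This is an illustrative sketch under assumptions, not the lecture's implementation: the `lspi` function, its dataset format of `(s, a, r, s_next)` tuples, the `one_hot` feature map, and the toy two-state problem are all made up for the example. The next-step features are recomputed in every iteration because they use the greedy action under the current Q estimate.

```python
import numpy as np

def lspi(features, dataset, actions, gamma, n_iters=20):
    """Alternate an LSTD-style solve for Q-weights with greedy policy improvement."""
    Phi = np.array([features(s, a) for s, a, _, _ in dataset])
    r = np.array([rew for _, _, rew, _ in dataset])
    w = np.zeros(Phi.shape[1])
    for _ in range(n_iters):
        # Features of (s', greedy action at s') under the current weights.
        Phi_next = np.array([
            max((features(s2, a2) for a2 in actions), key=lambda f: f @ w)
            for _, _, _, s2 in dataset
        ])
        A = Phi.T @ (Phi - gamma * Phi_next)
        # lstsq tolerates a singular A, which can happen with sparse data.
        w_new = np.linalg.lstsq(A, Phi.T @ r, rcond=None)[0]
        if np.allclose(w_new, w):
            break  # weights (and hence the greedy policy) have stopped changing
        w = w_new
    return w

# Hypothetical problem: 2 states, 2 actions; action 0 stays, action 1 switches.
# Reward is 1 whenever the next state is state 1.
def one_hot(s, a):
    f = np.zeros(4)
    f[2 * s + a] = 1.0
    return f

dataset = [(0, 0, 0.0, 0), (0, 1, 1.0, 1), (1, 0, 1.0, 1), (1, 1, 0.0, 0)]
w = lspi(one_hot, dataset, actions=[0, 1], gamma=0.5)
print(w)  # Q estimates ordered as (s0,a0), (s0,a1), (s1,a0), (s1,a1)
```

In this toy problem the dataset covers every state-action pair, so the greedy step never queries an action the data does not support. With a narrower dataset, that greedy step is exactly where unsupported actions would be evaluated.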

00:18:36 This part covers featurizing state-action pairs and iterating policy updates in offline reinforcement learning, and discusses the distributional shift problem in approximate dynamic programming methods.

📚 Featurizing actions in reinforcement learning.

💡 Phi prime, the matrix of next-step state-action features, depends on the policy pi because the next action is chosen by pi.

⚙️ The distributional shift problem in offline RL.

You might also like...

Read more on Science & Technology

What is ChatGPT and how you are using it wrong!

Convocação para estarmos em Mogi Mirim - 18 de agosto as 11h na Secretaria de Segurança!

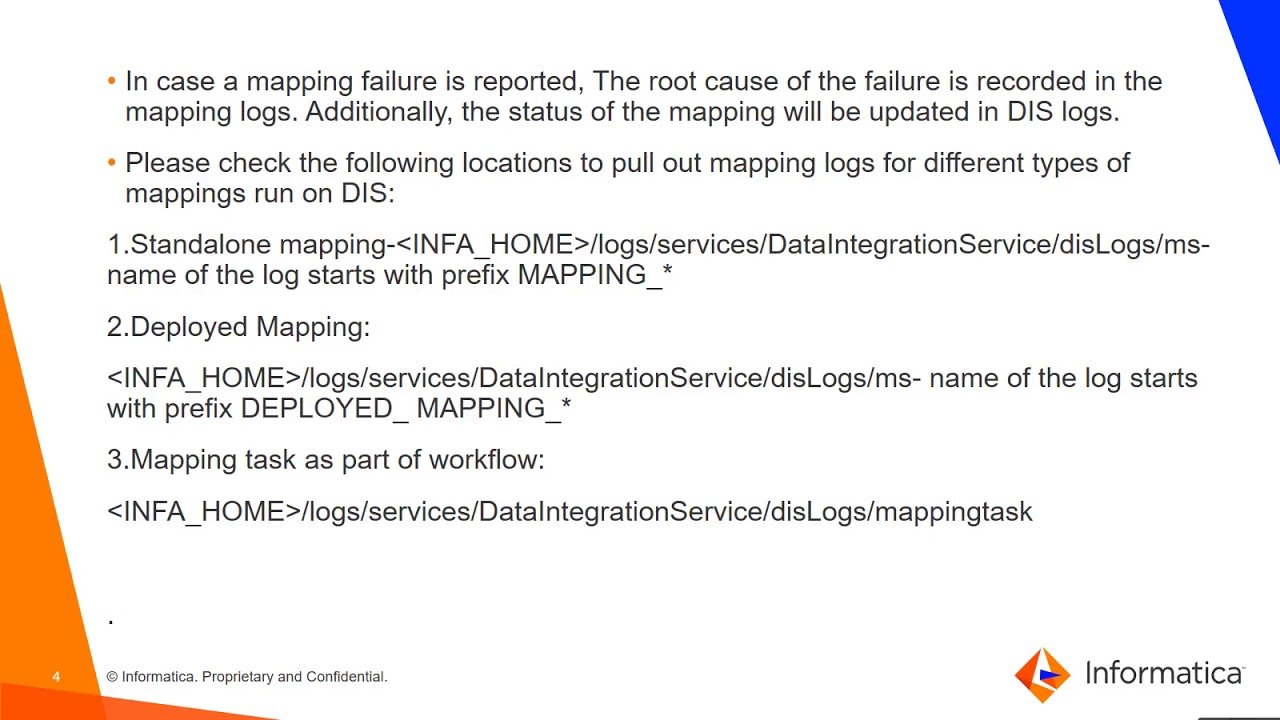

Mapping Failure on DIS

Top 10 Vitamin B12 Rich Foods | Cobalamin Rich Foods (For Healthy blood and Nerve cells)

Kenapa Harga Barang Selalu Naik? (Penjelasan Inflasi)

How to Prevent Getting CockBlocked By Her Friends