Scaling and Advancements in Large Language Models (in 2023)

Insights on the importance of scale and the emergence of new capabilities in large language models in 2023.

00:00:00 Why scale matters for large language models and how new capabilities emerge with it. Researchers should adopt a dynamic perspective and constantly unlearn outdated ideas.

🔑 Large language models have unique abilities that only emerge at a certain scale.

🔍 Because capabilities change with scale, the field of language models needs to be viewed from a different, dynamic perspective.

🔄 Constantly unlearning invalidated ideas and updating intuition is crucial in this dynamic field.

00:07:03 Large language models use the Transformer architecture as a sequence-to-sequence mapping. Scaling means distributing the matrices efficiently across machines while minimizing communication between them. Matrix multiplication is expressed through an abstraction over the hardware so that it scales.

🔍 Large language models use the Transformer architecture, which maps an input sequence to an output sequence.

💡 The Transformer allows tokens in a sequence to interact with each other through dot product operations.

⚙️ Scaling the Transformer involves efficiently distributing the matrices involved in the computation across multiple machines.
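
The dot-product interaction between tokens can be sketched in plain Python. This is a minimal single-head illustration under simplifying assumptions: there are no learned projection matrices and no masking, and the function names `softmax` and `dot_product_attention` are hypothetical, not taken from the talk.

```python
import math

def softmax(scores):
    """Convert a list of raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot_product_attention(queries, keys, values):
    """Mix value vectors using scaled dot products between queries and keys."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # One dot product per (query token, key token) pair.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of all value vectors.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
attended = dot_product_attention(tokens, tokens, tokens)
```

Every output row depends on all three input tokens, which is the sense in which tokens "interact". In a real model the nested loops become the matrix multiplications that are distributed across machines.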

00:14:08 How matrix multiplication is parallelized, and how the same approach applies to the self-attention layer of the Transformer to achieve data and model parallelism across multiple machines.

🔑 Large language models use parallelization to speed up computation.

🔑 Einstein summation notation can be used to express array computations.

🔑 Parallelization can be applied to transformer models for efficient training.
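
A small NumPy sketch can make the link between Einstein summation notation and parallelism concrete. It assumes two simulated "devices" (plain array shards on one machine), and the shapes and variable names are illustrative rather than taken from the talk.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8))  # activations: (batch, d_model)
w = rng.standard_normal((8, 6))  # weights: (d_model, d_hidden)

# Matrix multiplication in Einstein summation notation:
# the repeated index d is summed over; b and h remain in the output.
full = np.einsum("bd,dh->bh", x, w)

# Model parallelism (simulated): split the weight columns across two shards.
w_shards = np.split(w, 2, axis=1)
model_parallel = np.concatenate(
    [np.einsum("bd,dh->bh", x, shard) for shard in w_shards], axis=1
)

# Data parallelism (simulated): split the batch across two shards.
x_shards = np.split(x, 2, axis=0)
data_parallel = np.concatenate(
    [np.einsum("bd,dh->bh", shard, w) for shard in x_shards], axis=0
)

assert np.allclose(full, model_parallel)
assert np.allclose(full, data_parallel)
```

Splitting along an index that survives in the output (`b` or `h`) lets each shard work independently. Splitting along the summed index `d` would instead leave each shard with a partial sum that has to be added across devices, which is where communication cost enters.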

00:21:13 The video discusses the process of scaling large language models and the challenges associated with it. It also emphasizes the importance of post-training research on top of the engineering work.

📚 Training code for large language models relies on a parallelization decorator and a compiler-based approach to scale neural network training across devices.

🔧 Scaling language models is a hardware-heavy engineering problem in which each iteration is expensive, which complicates decision-making.

📈 Scaling laws and understanding performance extrapolation are critical in pre-training large language models.

⚙️ Scaling language models is still challenging and requires continuous research and problem-solving beyond engineering issues.
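
Performance extrapolation can be illustrated with a toy power-law fit. This sketch assumes the simplest possible form, loss = a * size^slope with no irreducible-loss term, and uses synthetic numbers invented for the example; `fit_power_law` is a hypothetical helper, not something from the talk.

```python
import math

def fit_power_law(sizes, losses):
    """Least-squares fit of log(loss) = log(a) + slope * log(size)."""
    xs = [math.log(s) for s in sizes]
    ys = [math.log(l) for l in losses]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), slope

# Synthetic losses generated from loss = 10 * size ** -0.1 (made-up numbers).
small_sizes = [1e6, 1e7, 1e8]
small_losses = [10.0 * s ** -0.1 for s in small_sizes]

# Fit on the small runs, then extrapolate to a much larger model.
a, slope = fit_power_law(small_sizes, small_losses)
predicted_loss_at_1e10 = a * 1e10 ** slope
```

The point of such a fit is to decide from cheap small runs whether an expensive large run is likely to be worth it. Real curves are noisier, so the extrapolation carries more uncertainty than this clean example suggests.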

00:28:17 Pre-trained language models are limited in their ability to generate concise and accurate responses. Instruction fine-tuning improves task understanding and generalization, but adding more tasks yields diminishing returns.

🔑 Pre-trained models have limitations in generating crisp answers to specific questions and often generate natural continuations even for harmful prompts.

💡 Instruction fine-tuning is a technique where tasks are framed as natural language instructions and models are trained to understand and perform them.

📈 Increasing the diversity of tasks during fine-tuning improves model performance, but there are diminishing returns beyond a certain point.
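
Framing tasks as natural language instructions amounts to a data-formatting step, sketched below. The prompt template and the helper name `to_instruction_example` are assumptions made for illustration. Actual instruction-tuning datasets use many different templates.

```python
def to_instruction_example(instruction, input_text, target):
    """Frame one task instance as a (prompt, target) pair of plain strings."""
    prompt = f"{instruction}\n\nInput: {input_text}\nOutput:"
    return {"prompt": prompt, "target": " " + target}

# Two different tasks expressed in the same text-to-text format.
examples = [
    to_instruction_example(
        "Classify the sentiment of the review as positive or negative.",
        "The film was a delight from start to finish.",
        "positive",
    ),
    to_instruction_example(
        "Translate the sentence into French.",
        "Good morning.",
        "Bonjour.",
    ),
]
```

Because every task ends up as plain text, adding a new task to the fine-tuning mixture means adding more such pairs rather than changing the model.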

00:35:21 The limitations of supervised learning as the learning objective for large language models, and reward models with comparison-based learning as an alternative.

✨ Supervised learning with cross-entropy loss is effective, but it has inherent limitations as a learning objective for large language models.

🤔 As tasks become more abstract, formalizing the correct behavior for a given input becomes harder, and there may be no single correct answer.

⚙️ The objective function of maximum likelihood may not be expressive enough for teaching models abstract and ambiguous behaviors.
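
The single-correct-answer assumption is visible in the loss itself. The sketch below computes the cross-entropy of a target sequence under hypothetical next-token distributions. The probabilities and token strings are invented for illustration.

```python
import math

def cross_entropy(predicted_probs, target_tokens):
    """Average negative log-probability assigned to the given target tokens."""
    nll = [
        -math.log(step[token])
        for step, token in zip(predicted_probs, target_tokens)
    ]
    return sum(nll) / len(nll)

# Hypothetical next-token distributions at two positions.
predicted = [
    {"good": 0.5, "great": 0.4, "bad": 0.1},
    {"movie": 0.9, "film": 0.1},
]

# The loss only knows about the one reference it is given.
loss_reference = cross_entropy(predicted, ["good", "movie"])
loss_paraphrase = cross_entropy(predicted, ["great", "film"])
```

If "good movie" is the only reference, training lowers `loss_reference` and has no way to credit "great film" as an equally acceptable answer.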

00:42:27 A high-level view of how reward models are used to train large language models and of the challenge of reward hacking. Explains why studying RL is important despite its difficulties, and proposes learning the objective function as the next-generation paradigm.

📌 In reinforcement learning, the language model is trained by trial and error: a policy model is given a prompt, generates a completion, and a reward model evaluates that output.

🔍 Reward hacking is a common failure mode in which the policy exploits flaws in the reward model, for example a preference for longer completions, to maximize reward.

🧠 Studying reinforcement learning is important because maximum likelihood is a strong inductive bias and may not scale well with larger models.

🔬 The next paradigm in AI could involve learning the loss function or objective function, which has shown promise in recent models.
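
Comparison-based learning for a reward model can be sketched with a pairwise loss. The formulation below (negative log-sigmoid of the reward margin, in the Bradley-Terry style) is one common choice and is assumed here rather than confirmed by the summary. The scalar rewards are hypothetical.

```python
import math

def pairwise_loss(reward_preferred, reward_rejected):
    """Negative log-sigmoid of the margin between the two rewards."""
    margin = reward_preferred - reward_rejected
    # -log(sigmoid(margin)) rewritten as log(1 + exp(-margin)).
    return math.log(1.0 + math.exp(-margin))

# Hypothetical scalar scores from a reward model for two completions.
loss_correct_order = pairwise_loss(2.0, 0.5)  # preferred completion scored higher
loss_wrong_order = pairwise_loss(0.5, 2.0)    # preferred completion scored lower
loss_tie = pairwise_loss(1.0, 1.0)            # no preference expressed
```

The loss needs only a judgment about which of two completions is better, not a single correct answer. That makes it easier to apply to ambiguous behaviors than maximum likelihood on one reference.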

You might also like...

Read more on People & Blogs

How Many Moons Could Earth Handle? #kurzgesagt #shorts

Buk3le: La mano dura funciona así los progr3s digan lo contrario

Discipline Is Actually An Emotion

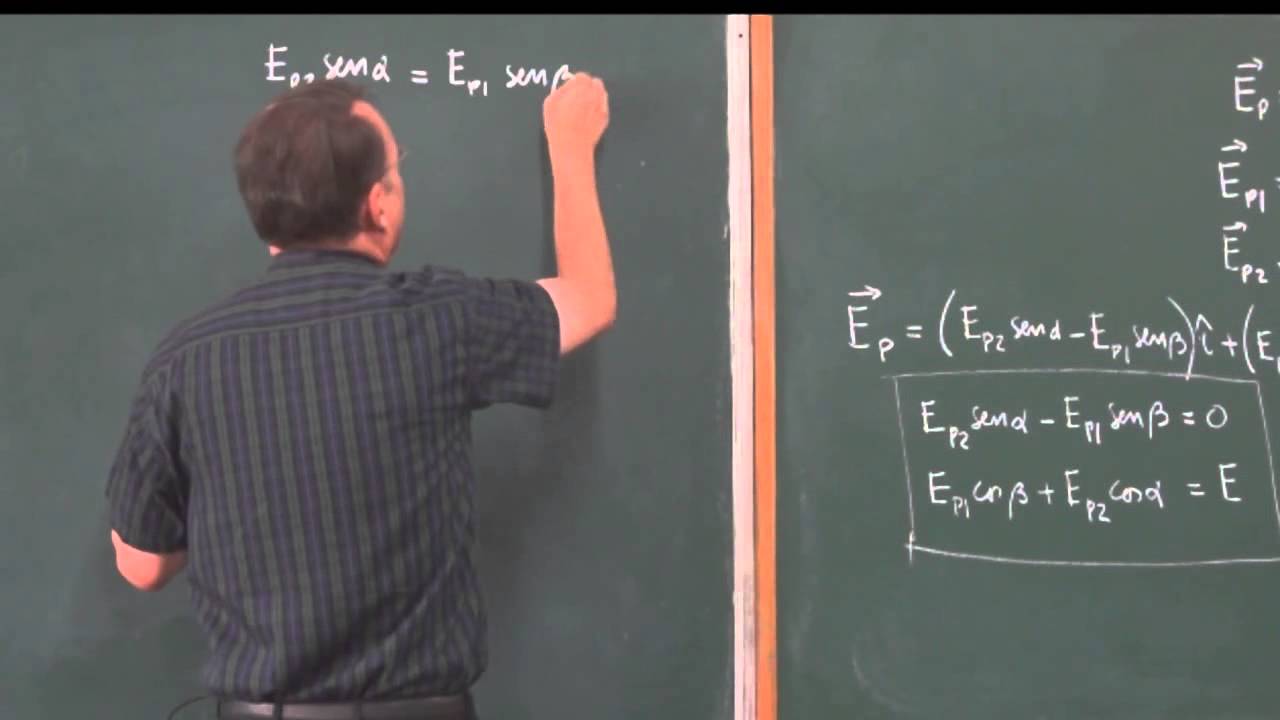

Clase 12: Problema 5; Cálculo de la magnitud del campo eléctrico debido a dos cargas.

Curso virtual inmersivo de Campo - Módulo 10

Manipulación y el poder de las emociones | DW Documental